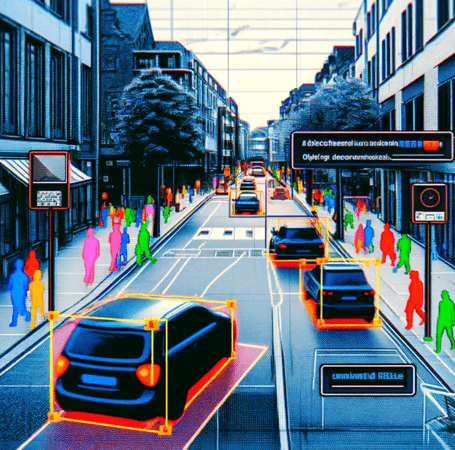

The way a Data Scientist tries to tame this problem is to use training data. They collect as much as they can afford (in time, money or capacity) and label each example with its ‘class’: each picture is labelled correctly as a cat, a helicopter, an ice cream, and so on, thousands or millions of times over. All of this training data is then fed into the AI, which produces an output for each example. Whenever the output is wrong, the AI's internal parameters are adjusted, and the data is passed through again and again until the AI reliably labels cats, helicopters and ice creams. What's happening in this process is that each of those inputs fills out the ‘known’ Decision Space. At the end of the process, you have a trained AI with high accuracy and lightning-quick decision-making times. It is amazing at identifying the things it was trained on.
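To make the "label, predict, correct, repeat" loop concrete, here is a minimal sketch using a perceptron, one of the simplest learning rules. It is an illustration, not how any particular image classifier is built: the two features per "picture", the six training examples and the learning rate are all hypothetical stand-ins for real image data.

```python
# Hypothetical toy data: each "picture" is reduced to two made-up features
# (think "ear pointiness" and "whiskeriness"), labelled 1 for cat, 0 for helicopter.
TRAINING_DATA = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.7), 1),
    ((0.1, 0.2), 0),
    ((0.2, 0.1), 0),
    ((0.3, 0.2), 0),
]


def predict(weights, bias, features):
    """Return 1 ("cat") if the weighted sum of the features is positive, else 0."""
    total = bias + sum(w * f for w, f in zip(weights, features))
    return 1 if total > 0 else 0


def train(data, learning_rate=0.1, max_epochs=100):
    """Perceptron rule: nudge the weights whenever a prediction is wrong, and
    keep passing over the data until every example is labelled correctly."""
    weights = [0.0, 0.0]
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for features, label in data:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * f for w, f in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is now labelled correctly
            return weights, bias, epoch
    return weights, bias, max_epochs


weights, bias, epochs = train(TRAINING_DATA)
print(f"all training examples correct after {epochs} passes")
print(predict(weights, bias, (0.85, 0.75)))  # a new input close to the training cats
```

Note that `predict` returns a label for any pair of numbers, including inputs far away from the six training points. The model has no built-in way of saying "I have never seen anything like this".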

But the issue here is that no matter how many pictures are gathered, they are still just a fraction of all the possible pictures of cats in different poses, locations, sizes, shapes, levels of furriness, shaved (hopefully not), etc. The unknown Decision Space is still far, far larger than the known Decision Space.
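A quick back-of-the-envelope calculation gives a sense of scale. The sketch below assumes tiny 28×28 greyscale images with 256 shades per pixel and a hypothetical dataset of one million labelled images; both numbers are illustrative choices, not figures from any real project.

```python
import math

# Illustrative assumptions: 28x28 greyscale images, 256 shades per pixel.
WIDTH, HEIGHT, SHADES = 28, 28, 256
TRAINING_IMAGES = 1_000_000  # hypothetical "millions"-scale labelled dataset

# Every possible combination of pixel values, as an exact integer.
possible_images = SHADES ** (WIDTH * HEIGHT)
digits = len(str(possible_images))

# log10 of (training images / possible images), to avoid float underflow.
log10_fraction = math.log10(TRAINING_IMAGES) - (WIDTH * HEIGHT) * math.log10(SHADES)

print(f"possible images: a number with {digits} digits")
print(f"fraction covered by the training data: about 10^{log10_fraction:.0f}")
```

Most of those pixel combinations are noise rather than cats, so this overstates the space of cat photos, but it shows why even a very large training set only covers a sliver of the possible inputs.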