The reality of war today is that AI-driven autonomous systems are already in use. In Ukraine, earlier efforts used modified console controllers to pilot drones. Now, AI-enabled recognition systems are powering reconnaissance missions. Fallen soldiers are being remotely identified and triaged with behaviour recognition, and even enemy soldiers are being matched to social media data.

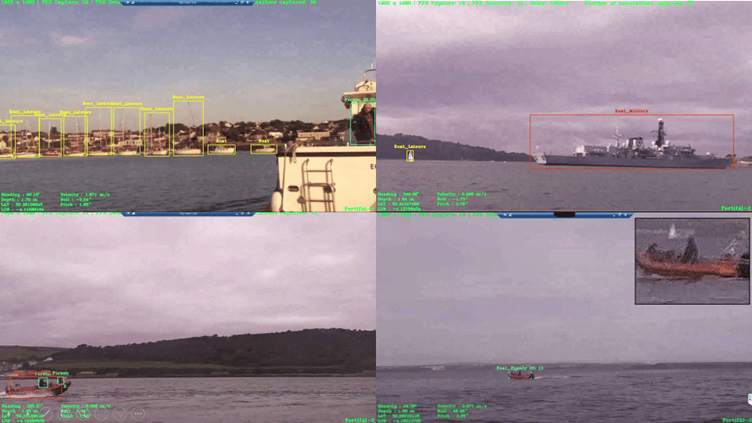

Image: an uncrewed Royal Navy test submarine automatically tracking vessels, fusing computer vision and sensors.

The Netherlands has gifted Ukraine the Seafox system to autonomously detect mines; Palantir claims to have supplied Ukraine with AI solutions to improve targeting systems; and Zvook offers Ukraine a missile detection system that uses AI to monitor a network of acoustic sensors.

Closer to home, deep tech start-ups like Vizgard are focusing on developing autonomous capabilities. Vizgard have run tests on an uncrewed test submarine for DASA and showcased an unmanned ground vehicle and an unmanned air vehicle intelligently coordinating as they home in on a target.

Are we to believe uniform military standards of robustness are found across the array of systems provided by multiple service providers?

Alex Kehoe, CEO of Vizgard, captures the initiative private companies must take: “Now, AI companies worth their salt need to put mechanisms in place to ensure that their tech doesn't fall over when it matters most…the responsibility has shifted to private companies proving and quantifying the anticipated real-world performance.”

The successful use of these AI-enabled tools signals that AI is already enhancing military effect. But to whose robustness standards are these systems being built, and to what extent will they remain useable in a changing operational environment? Whilst the military does not enforce its own robustness standards, private military companies delivering AI-enabled military capability will be forced to set their own.

A lack of universal robustness standards means different tools come with different levels of built-in trust. This lack of discipline could undermine the trustworthiness of AI tools in general.

One thing is certain: autonomous military operations are on the rise and will be heavily influenced by the private companies involved. In the absence of a dedicated international military framework for AI, it's best we review autonomous operations through the lens of self-benefit.