Sorting your holiday snaps

Artificial intelligence is changing the way we live. Mundane tasks that would once have eaten up a tedious afternoon can now be completed in minutes by a machine, whilst you get on with life. One example: organizing photographs. Few want to spend their Sundays sorting holiday snaps of family members from images of landscapes. Fortunately, there are now computer vision APIs freely available on the internet that can categorize your images for you, or that a business can use to offer that service to you. For us, that’s interesting, as we specialise in defending AI systems. To defend, you have to be able to attack, so let’s have a look at one of the commercial APIs.

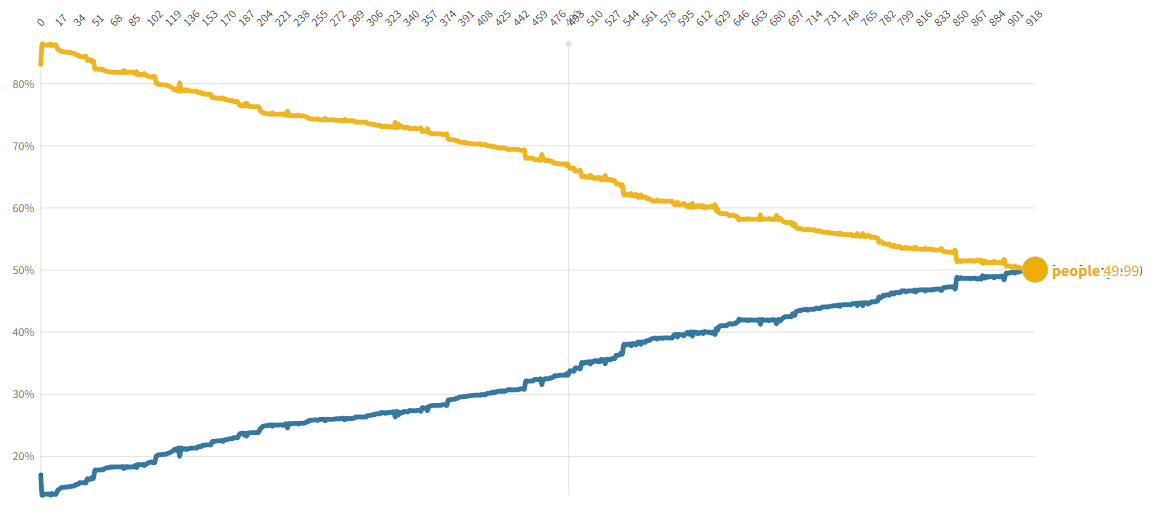

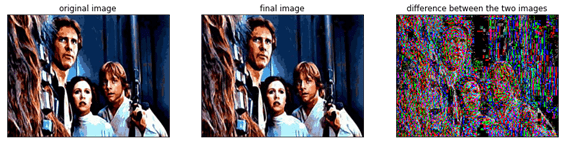

The computer science literature is filled with cases of AI systems being fooled into misclassifying images. These attacks work because it’s possible to add carefully calibrated noise to an image of, say, a cat, in a way that makes an AI system fail to recognize it, even though any human can still see that the image contains a cat. A subset of these attacks works even when we only have access to the inputs and outputs of a model, not its inner workings. This is known as a black box attack.
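To make the black box idea concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not the attack we ran and not any real API. `make_black_box` is a hypothetical stand-in for a remote classifier (a tiny linear model whose weights the attacker never sees), and `random_search_attack` is a simple random search chosen for illustration. The attacker only submits images and reads back the label and score, and every pixel stays within `epsilon` of its original value, so the change remains small.

```python
import random


def make_black_box(n_pixels):
    """Hypothetical stand-in for a remote vision API (an assumption for this sketch).

    The attacker can call query() but never sees the weights.
    """
    weights = [1.0 if i % 2 == 0 else -1.0 for i in range(n_pixels)]

    def query(image):
        score = sum(w * p for w, p in zip(weights, image))
        return ("cat" if score > 0 else "not cat"), score

    return query


def random_search_attack(query, image, epsilon=0.05, max_queries=2000, seed=1):
    """Try to flip the label using only query access.

    Each step nudges one random pixel to original +/- epsilon and keeps the
    change only if the returned score moves towards the other class.
    Returns (adversarial_image, queries_used), or (None, max_queries) on failure.
    """
    rng = random.Random(seed)
    original_label, best_score = query(image)
    sign = 1 if original_label == "cat" else -1
    adv = list(image)
    for n in range(1, max_queries + 1):
        i = rng.randrange(len(adv))
        candidate = list(adv)
        delta = rng.choice((-epsilon, epsilon))
        # Perturb relative to the ORIGINAL pixel so the noise never exceeds epsilon,
        # and clip to the valid pixel range [0, 1].
        candidate[i] = min(1.0, max(0.0, image[i] + delta))
        label, score = query(candidate)
        if label != original_label:
            return candidate, n
        if sign * score < sign * best_score:
            adv, best_score = candidate, score
    return None, max_queries


# Usage: a 64-pixel grey "image" that the toy model labels as a cat.
query = make_black_box(64)
image = [0.51 if i % 2 == 0 else 0.5 for i in range(64)]
adv, n_queries = random_search_attack(query, image)
print(query(image)[0], "->", query(adv)[0], "after", n_queries, "queries")
```

Real attacks against commercial APIs are far more query-efficient and have to cope with rate limits and much larger images, but the loop has the same shape: perturb, query, keep what helps.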