Welcome to ML Ops

Machine Learning (ML) and Artificial Intelligence (AI) are new technologies that are still finding their footing in the commercial sector. Although few systems can yet be considered a complete solution, many new AI/ML-based companies are already capitalising on the benefits, and traditional businesses will need to follow suit.

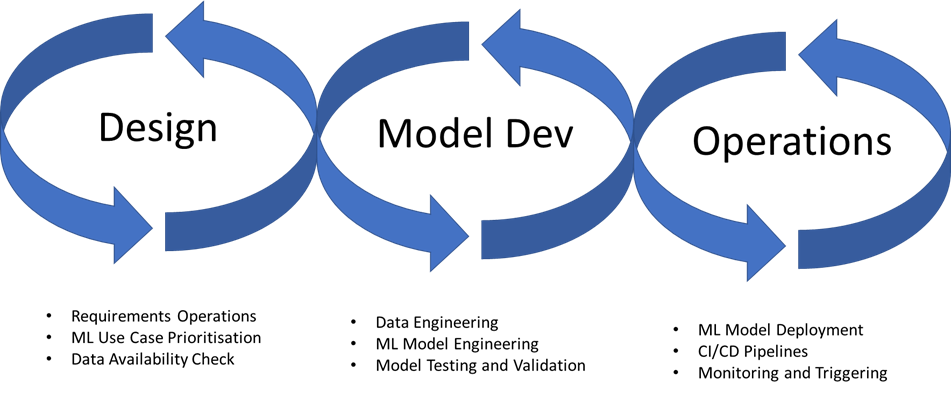

As AI and ML moved beyond pure research into the business and service sectors, a new development domain opened up. This was the beginning of ML Ops, short for DevOps (Development Operations) for machine learning, which focuses on the Automation, Reproducibility, Validation, Deployment and Retraining of machine learning models.