IT’S NOT JUST PICTURES OF CATS AND STICKERS ON STOP SIGNS

If you take an introductory course on adversarial AI, you will likely be shown a series of cases in which neural networks turn out to be less clever than many people assume. You’ll see images of cats misidentified as boats, or a person wearing funny glasses being ignored by a facial recognition system. However, these adversarial examples cease to be a sideshow curiosity once they are paired with nefarious intent.

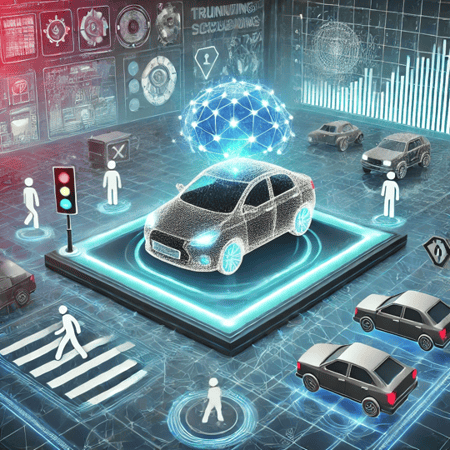

Adversarial attacks exploit flaws in the artificial reasoning of algorithms. These mismatches between what a model does and what a human would expect can have serious consequences if a driverless car fails to see a stop sign or a police database fails to recognize the face of a perpetrator. The greater the incentive to fool an AI system, the stronger the defenses we need to build.
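To make "exploiting a flaw in a model's reasoning" concrete, here is a minimal sketch of one well-known attack, the fast gradient sign method (FGSM), applied to a toy logistic-regression classifier. The source does not describe any particular attack; the technique is swapped in for illustration, and the weights, input and `eps` budget are hypothetical values chosen only so the effect is easy to see. The attacker nudges every input feature by a small amount in whichever direction most increases the model's loss.

```python
import math

# Hypothetical toy model: logistic regression with hand-picked weights.
WEIGHTS = [1.0, -2.0, 0.5, 1.5]
BIAS = 0.0


def score(x):
    """Linear score z = w . x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    """Return class 1 if the model's probability is at least 0.5, else 0."""
    return 1 if sigmoid(score(x)) >= 0.5 else 0


def sign(v):
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, eps):
    """Fast gradient sign method: shift each feature by eps in the direction
    that increases the binary cross-entropy loss for the true label y."""
    # For logistic regression, d(loss)/dx_i = (sigmoid(z) - y) * w_i.
    coeff = sigmoid(score(x)) - y
    return [xi + eps * sign(coeff * w) for xi, w in zip(x, WEIGHTS)]


x = [0.2, -0.1, 0.4, 0.1]      # made-up input, correctly classified as 1
x_adv = fgsm(x, y=1, eps=0.2)  # no feature changes by more than 0.2

print(predict(x), round(score(x), 2))          # 1 0.75
print(predict(x_adv), round(score(x_adv), 2))  # 0 -0.25
```

Because each feature moves by at most `eps`, the score shifts by `eps` times the sum of the absolute weights (here 0.2 × 5.0 = 1.0), which is enough to push it across the decision boundary. Deep networks can be attacked the same way in principle, with the gradient computed by backpropagation rather than by hand.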

Within the commercial realm, nowhere are the rewards for gaining an edge greater than in finance, where AI is increasingly taking over key aspects of trading strategy. High-frequency trading has taken humans almost completely out of day-to-day decision making. Engineers set up trading algorithms to exploit fleeting inefficiencies in the market, often on the scale of microseconds. These systems make heavy use of AI and are vulnerable to adversarial attacks that could seriously damage the interests of their owners.