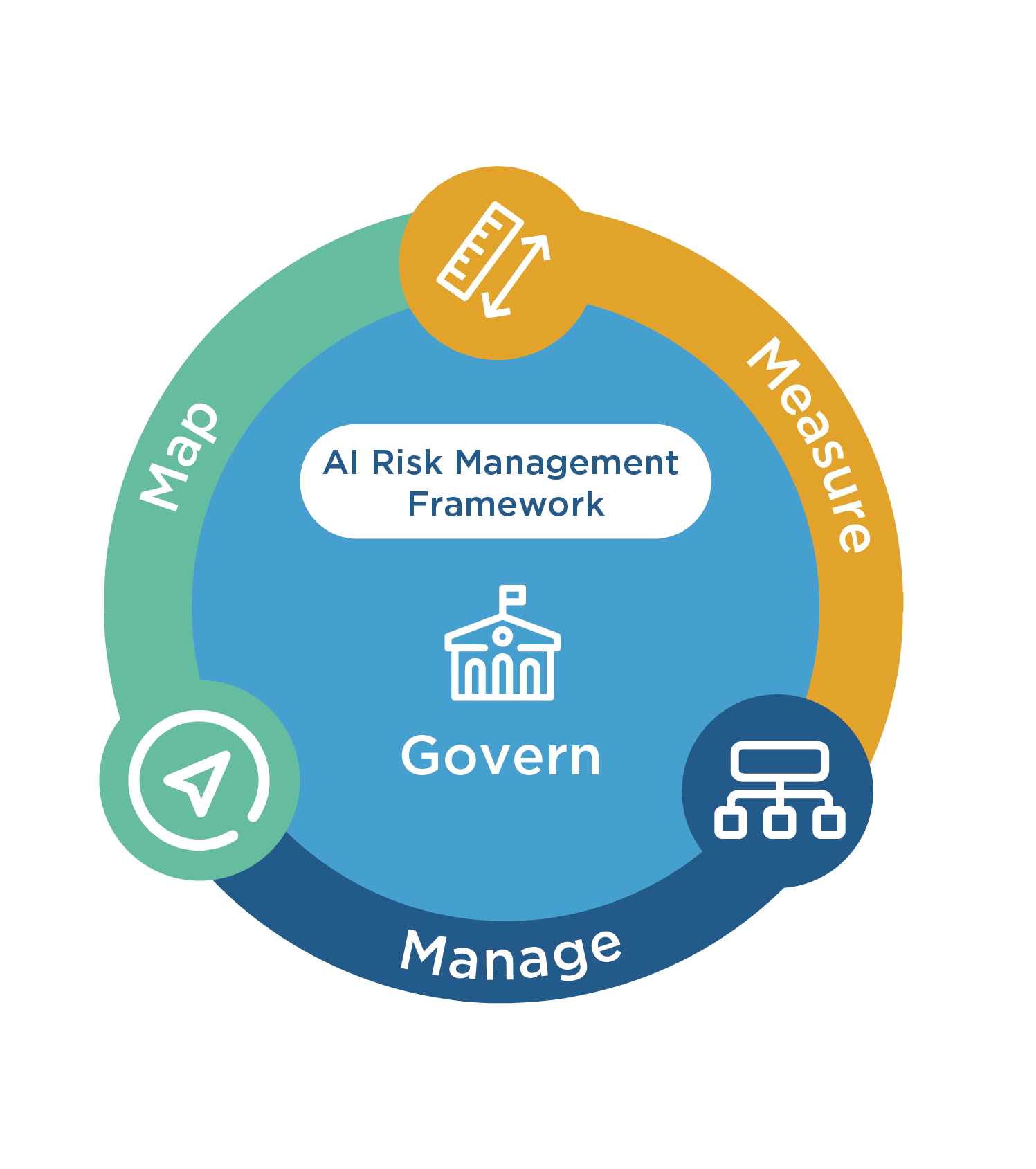

The AI RMF is intended to serve as a resource for organizations that design, develop, deploy, or use AI systems, helping them manage risks and promote the trustworthy and responsible development and use of AI. Although compliance with the AI RMF is voluntary, regulators' increasing scrutiny of AI makes it a useful source of practical guidance for companies seeking to manage AI risks.

The AI RMF is divided into two parts: (I) Foundational Information and (II) Core and Profiles. Part I addresses how organizations can frame the risks related to their AI systems, including:

- Understanding and addressing the risks, impacts, and harms that may be associated with AI systems.

- Addressing the challenges of AI risk management, including those related to third-party software, hardware, and data.

- Incorporating a broad set of perspectives across the AI life cycle.