11 Sep 2024

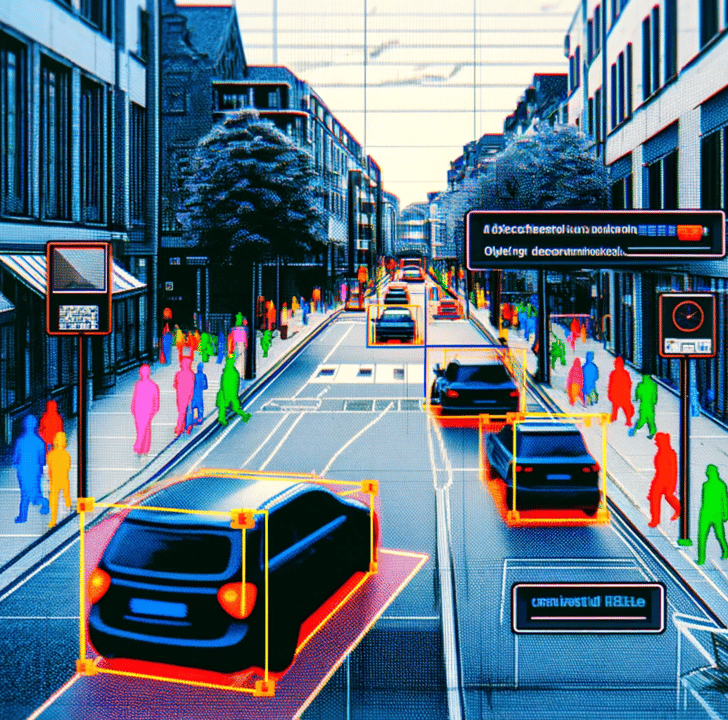

A Look at Advai’s Assurance Techniques as Listed on CDEI

In the absence of standardisation, it is up to present-day adopters of #ArtificialIntelligence systems to select the most appropriate assurance methods themselves.

Here's an article about a few of our approaches, with some introductory commentary about the UK Government's drive to promote transparency across the #AISafety sector.